The Earthquake and Tsunami large scale pilot exposes its workflow

In the LEXIS project, one of the objectives is to demonstrate that complex simulations can run under real-time deadlines, so that predictions arrive in time for decision making. One of the project's pilots, the Earthquake and Tsunami large scale pilot, does this by combining tsunami inundation simulations and earthquake loss assessment in a workflow triggered by an earthquake event. The pilot has progressed well and is now being opened up, in particular to support the LEXIS Open Call initiative. Some of its results were also featured at the HPC for Urgent Computing workshop at SC20, which showed that the HPC community shares these concerns.

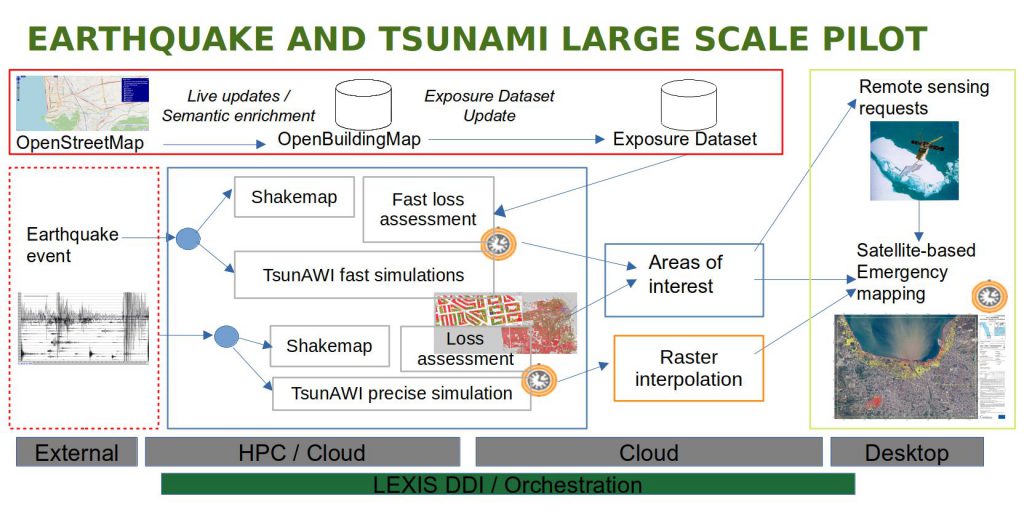

How does the pilot, shown in Figure 1, work? First, we need up-to-date knowledge of possible victims and damage to structures, which is maintained by a 24/7 flow around the OpenBuildingMap system. OpenBuildingMap is updated from OpenStreetMap data and feeds an exposure dataset: a fast, optimized structure that gives access to the vulnerability of buildings around the world, including the people in those buildings.

The second element in the pilot is the event-triggered part. It starts when an earthquake happens and has two branches: a fast path and a precise path.

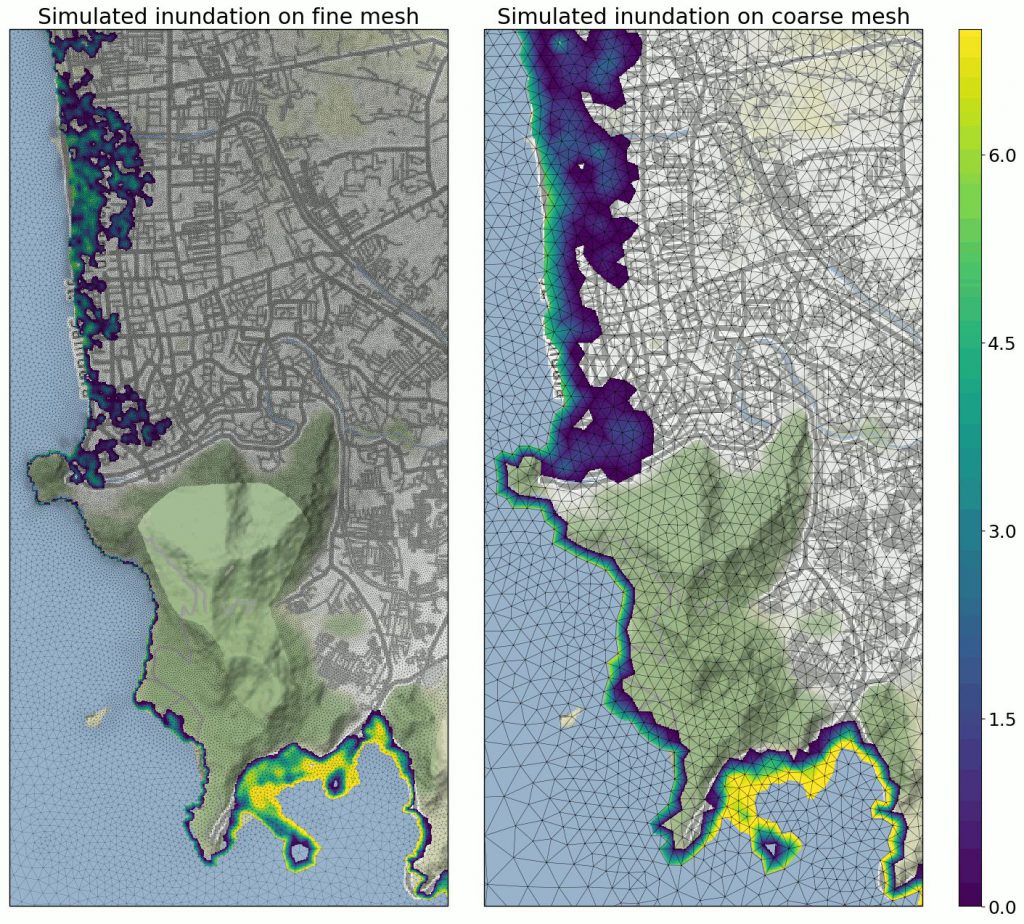

The fast path is triggered by the early earthquake event information, which initially is only the hypocenter (the earthquake's starting location and depth) and the magnitude. When such an event occurs, a LEXIS workflow starts that computes a shakemap, runs fast tsunami simulations with TsunAWI, and produces a fast (aggregate) loss assessment from the exposure dataset. The key result is that optimisations to TsunAWI allow very fast inundation runs on a coarse mesh of the area (Figure 2): they are sixty times faster than the more precise runs, with runtimes as low as 5 seconds.

The precise path is triggered a few minutes later, on reception of the earthquake moment tensor information. This starts a second, more precise shakemap generation, a detailed loss assessment, and a precise tsunami inundation simulation on a finer mesh. As in the fast path, the jobs are triggered via the LEXIS orchestration tools.
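To make the two branches concrete, here is a minimal Python sketch of how an incoming event could be mapped to one path or the other. It is not the LEXIS orchestration API: the `EarthquakeEvent` class, the `select_jobs` function and the job names are all hypothetical, chosen only to mirror the steps described above, and the event values are illustrative rather than real data.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

# Hypothetical job names; they only mirror the steps named in the text.
FAST_PATH = ["fast_shakemap", "fast_tsunami_coarse_mesh", "aggregate_loss_assessment"]
PRECISE_PATH = ["precise_shakemap", "detailed_loss_assessment", "precise_tsunami_fine_mesh"]


@dataclass(frozen=True)
class EarthquakeEvent:
    """Event information as it becomes available (hypothetical structure)."""
    latitude: float
    longitude: float
    depth_km: float
    magnitude: float
    # Moment tensor components; None until they are received.
    moment_tensor: Optional[Tuple[float, ...]] = None


def select_jobs(event: EarthquakeEvent) -> List[str]:
    """Return the jobs to submit for the information available so far."""
    if event.moment_tensor is None:
        # Only hypocenter and magnitude are known: run the fast path.
        return list(FAST_PATH)
    # The moment tensor has arrived: run the precise path.
    return list(PRECISE_PATH)


# Illustrative values, not real event data.
early = EarthquakeEvent(latitude=38.0, longitude=27.0, depth_km=10.0, magnitude=7.0)
update = EarthquakeEvent(38.0, 27.0, 10.0, 7.0,
                         moment_tensor=(1.0, -1.0, 0.0, 0.0, 0.0, 0.0))

print(select_jobs(early))
print(select_jobs(update))
```

In this sketch the same event is simply re-submitted once the moment tensor is known; a real deployment would hand the selected jobs to the orchestrator rather than print them.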

The final part of this workflow is a satellite-based emergency mapping process, in which remote sensing images are analyzed to produce fast damage assessment maps for affected areas (Izmir in Turkey is a recent example). A core component here is the procedure for quickly identifying potential areas of interest by crossing event-related datasets (i.e. shakemaps and inundation maps) with exposed assets, and prioritizing them by potential impact. Identifying those areas quickly and on objective criteria makes the satellite image acquisition process better informed and more robust.
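The idea of crossing hazard maps with exposed assets can be illustrated with a small Python sketch. The criteria used in the pilot are not detailed in this post, so everything here is an assumption made for illustration: the `Cell` structure, the thresholds, and the rule of ranking affected cells by exposed population.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Cell:
    """One map cell with hazard and exposure values (hypothetical structure)."""
    name: str
    shaking_intensity: float    # taken from a shakemap
    inundation_depth_m: float   # taken from an inundation map
    exposed_people: int         # taken from the exposure dataset


def rank_areas_of_interest(cells: List[Cell],
                           min_intensity: float = 6.0,
                           min_depth_m: float = 0.5) -> List[Cell]:
    """Keep cells hit by shaking or flooding, most exposed people first.

    The thresholds and the ranking rule are assumptions for this sketch.
    """
    affected = [
        c for c in cells
        if c.shaking_intensity >= min_intensity
        or c.inundation_depth_m >= min_depth_m
    ]
    return sorted(affected, key=lambda c: c.exposed_people, reverse=True)


# Illustrative values, not real data.
cells = [
    Cell("A", shaking_intensity=7.0, inundation_depth_m=0.0, exposed_people=100),
    Cell("B", shaking_intensity=3.0, inundation_depth_m=1.0, exposed_people=500),
    Cell("C", shaking_intensity=2.0, inundation_depth_m=0.0, exposed_people=10000),
]

ranked = rank_areas_of_interest(cells)
print([c.name for c in ranked])
```

Cell C has the most people but is not affected by either hazard, so it is dropped before ranking; the remaining cells are then ordered by exposure.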

One of the key elements of the workflow is that its interfaces are standardized, which allows components to be replaced and additional information to be brought in. The core workflow infrastructure is also designed to intrude as little as possible into the working of the individual elements of the flow. For example, more tsunami simulations could be added, providing inundation maps to the procedure that determines the areas of interest. Additional shakemaps could be generated by seismic simulations. Such additions could run on the LEXIS infrastructure via the Open Call mechanism, or be provided as urgent computing requests from other supercomputing centers.

This sums up this blog post about the Earthquake and Tsunami large scale pilot. There is much more to say about this pilot, both on work that has already been completed and on work that is underway, and I hope we’ll be able to share it in other blog posts.