Technical Documentation

WHAT DOES LEXIS PROVIDE FOR APPLICATION EXPERIMENTS?

LEXIS PORTAL & VISUALISATION

Web portal

The LEXIS Portal will act as the main entry point to LEXIS for users who are not experienced with the LEXIS technology stack. Participants in the Open Call will be the first external users of this portal. The LEXIS Portal will provide functionality to interact with datasets (view public datasets, upload/download private datasets), request access to resources by creating new projects, run workflows on a mix of HPC and Cloud technologies, and view usage and accounting information pertaining to the use of system resources.

As the LEXIS Portal is still new, LEXIS team members will work closely with Open Call participants to understand how they use the portal (resource requests, dataset and workflow management), gather feedback on usage, user experience and ergonomics and, where possible, modify the functionality of the LEXIS Portal as necessary.

Visualisation

3D remote visualization gives LEXIS users the ability to interact smoothly with their graphically demanding 2D or 3D applications, without needing acceleration (GPU) on their client host and without downloading large (up to extremely large) datasets to view them. In this model, both the data to be viewed and the 3D rendering power (GPU devices) remain on the cloud/server side.

Remote visualization middleware is needed to encode the 3D scene rendered on the server side into a stream while accepting mouse and keyboard input from the user, in such a way that the user can hardly perceive any difference from local rendering.

Finally, the LEXIS Portal will make this complex mechanism transparent to the user, provided that the middleware exposes a RESTful application programming interface (REST API).

Use cases requiring such technology have been clearly identified for at least two of the existing pilots (3D interactive post-processing of CFD simulations, and interactive encoding of weather forecast images into 2D videos). These use cases will be modelled in the orchestrator and stored in the related forge so that they can be reused in other or new projects.

TECHNICAL RESOURCES

Both IT4I and LRZ provide access to large-scale HPC infrastructures in the petaflop range (with the HEAppE front-end) and to Cloud infrastructures (IaaS OpenStack for running VMs) with more than 3,000 CPU cores in total.

On the storage side, we provide more than 200 TB of storage in total, accessible without major bureaucratic barriers. In addition, our burst-buffer systems, based on SSDs and NVRAM, can be used as very fast intermediate data stores in the multi-TB range.

COMPUTING RESOURCES

World-class European computing resources are made available through the LEXIS Open Call. The intended usage within the Open Call is experimental, focusing on testing the LEXIS platform in order to validate and optimise its functionality. This means that scientific production usage is generally out of scope.

New pilots can request usage of the LEXIS platform within the following limits:

- Normalised core hours – minimum: 100,000 core hours

- Normalised core hours – maximum: 1,000,000 core hours

A definition of normalised core hours (i.e. the normalisation factors that relate "raw" core hours to normalised values on each computing facility within the LEXIS platform) will be published with the Open Call. This definition may be subject to minor adjustments in the course of the project.
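The relationship between raw and normalised core hours can be sketched as a weighted sum checked against the request window above. This is a minimal sketch under stated assumptions: the system names `system_a`/`system_b` and their factors are hypothetical placeholders, since the official per-facility factors will only be published with the Open Call.

```python
from typing import Mapping

# Request window stated for the Open Call, in normalised core hours.
MIN_NCH = 100_000
MAX_NCH = 1_000_000


def normalised_core_hours(raw_hours: Mapping[str, float],
                          factors: Mapping[str, float]) -> float:
    """Scale each system's raw core hours by that system's factor and sum.

    The factors passed in are placeholders; the official per-facility
    factors will be published with the Open Call.
    """
    missing = set(raw_hours) - set(factors)
    if missing:
        raise KeyError(f"no normalisation factor for: {sorted(missing)}")
    return sum(hours * factors[system] for system, hours in raw_hours.items())


def within_limits(nch: float) -> bool:
    """True if a request lies inside the Open Call minimum/maximum."""
    return MIN_NCH <= nch <= MAX_NCH


# Hypothetical systems and factors, for illustration only.
factors = {"system_a": 1.0, "system_b": 2.0}
request = {"system_a": 200_000, "system_b": 150_000}
total = normalised_core_hours(request, factors)
print(total, within_limits(total))  # 500000.0 True
```

Raising an error for a system without a factor, rather than silently assuming 1.0, keeps a request from being under- or over-counted.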

LRZ Compute Cloud, Linux Cluster & SuperMUC-NG

At LRZ, LEXIS customers have access to HPC and Cloud Computing systems (shared with other users). The systems relevant for LEXIS are:

- LRZ Linux Cluster – about 1 PFlop/s, different segments (predominantly Intel Haswell CPUs), SLURM scheduler, parallel jobs with up to 1,792 cores on the largest (Haswell-CPU-based) segment

- SuperMUC-NG – 26 PFlop/s, Intel Skylake, SLURM scheduler, OmniPath, jobs can scale up to 147,456 cores; access to the machine requires a dedicated proposal

- DGX-1 – 170 TFlop/s (FP16 Peak), NVIDIA P100 GPUs x 8, NVLink

- LRZ Compute Cloud – IaaS Cloud with >3000 Intel Skylake cores (and a few GPUs) for the user to dynamically start Virtual Machines (VMs). Typical VMs have 4 GB of RAM per CPU core and a disk space of 20 GB

For further documentation see:

- https://doku.lrz.de/display/PUBLIC/High+Performance+Computing

- https://doku.lrz.de/display/PUBLIC/Compute+Cloud

IT4I infrastructure & HPC clusters

The operational infrastructure at IT4I is the following:

- Barbora HPC cluster – 848 TFlop/s, Intel Cascade Lake, NVIDIA V100, InfiniBand HDR, PBS scheduler

- Salomon HPC cluster – 2011 TFlop/s, Intel Haswell, Xeon Phi, InfiniBand QDR, PBS scheduler

- DGX-2 – 520 TFlop/s (FP16), 130 TFlop/s (FP64), NVIDIA V100 GPUs x 16, NVLink

- Anselm HPC cluster (to be decommissioned in Q4 2020) – 94 TFlop/s, Intel Sandy Bridge, InfiniBand QDR, PBS scheduler

For further documentation see:

Furthermore, IT4I has procured experimental infrastructure that will host a small OpenStack Cloud, which will be offered to Open Call applicants as a resource.

TESEO SMART GATEWAY

The smart gateway is mainly composed of three parts:

- Data receiving module,

- Data elaboration,

- Data sharing.

The data receiving modules, based on a wireless LoRa module and an RS485 wired link, collect data from the different sensor nodes in the network. The core of the data elaboration (processing) part is an STM32 microcontroller based on an Arm Cortex-M7; its main operations are data collection, pre-processing and submission. The data sharing part handles data parsing and formatting and the upload of data to the cloud; this can currently be done in three different ways:

- WiFi connection,

- LAN connection,

- Narrow band connection.

In LEXIS, the smart gateway provides a platform that collects data and sends it to a central database for use by the LEXIS Weather and Climate pilot. For the Open Call, the smart gateway can collect and send data in the same way it currently does for the Weather and Climate pilot, where it collects temperature, humidity, pressure, wind speed, wind direction and precipitation intensity. These data are pre-validated directly on the smart gateway and shared via the TCP/IP protocol with an external database, which can then be exploited for further services.
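The pre-validation and sharing steps can be illustrated in Python, although the gateway itself runs firmware on the STM32. This is a minimal sketch, not TESEO's implementation: the plausibility ranges, field names, newline-delimited JSON record format and the `send` helper are all hypothetical.

```python
import json
import socket
from typing import Dict, List, Tuple

# Hypothetical plausibility ranges used for pre-validation; the checks
# actually applied by the smart gateway are not documented here.
RANGES: Dict[str, Tuple[float, float]] = {
    "temperature_c": (-50.0, 60.0),
    "humidity_pct": (0.0, 100.0),
    "pressure_hpa": (850.0, 1100.0),
    "wind_speed_ms": (0.0, 75.0),
    "wind_direction_deg": (0.0, 360.0),
    "precipitation_mm_h": (0.0, 500.0),
}


def pre_validate(reading: Dict[str, float]) -> List[str]:
    """Return the names of fields that are missing or out of range."""
    problems = []
    for name, (low, high) in RANGES.items():
        value = reading.get(name)
        if value is None or not (low <= value <= high):
            problems.append(name)
    return problems


def to_payload(station_id: str, timestamp: str, reading: Dict[str, float]) -> bytes:
    """Serialise one reading as a newline-terminated JSON record (hypothetical format)."""
    record = {"station": station_id, "time": timestamp,
              "valid": not pre_validate(reading), **reading}
    return (json.dumps(record, sort_keys=True) + "\n").encode("utf-8")


def send(payload: bytes, host: str, port: int) -> None:
    """Push a record to a (hypothetical) database ingest endpoint over plain TCP."""
    with socket.create_connection((host, port), timeout=5) as sock:
        sock.sendall(payload)
```

Flagging invalid readings instead of dropping them leaves the decision to the receiving database, which keeps the raw field data available for later quality control.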

TESEO expects to broaden the LEXIS project's know-how through the experience of additional real users of the smart gateway, and hence through hard data from the field.

If requested by a selected applicant, TESEO will make up to 10 smart gateways available for the Open Call.

DATASETS

The LEXIS platform supports both public and private datasets: any dataset can be protected by authentication and authorization mechanisms that prevent unwanted access, sensitive data exposure and data leakage.

At the same time, datasets can be shared among LEXIS users or made publicly available on the LEXIS platform.

Results are treated as regular datasets and follow the same rules, which supports both use cases:

- Privacy of results

- Easy sharing of results
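The access rules above (private by default, explicit sharing, optional publication, results handled like any other dataset) can be modelled as a small authorization check. This is a minimal sketch under the assumption that authentication has already happened; the `Dataset` class, `Visibility` enum and `share` helper are hypothetical names, not the LEXIS DDI API.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Set


class Visibility(Enum):
    PRIVATE = "private"  # owner plus explicitly authorized users
    PUBLIC = "public"    # readable by everyone on the platform


@dataclass
class Dataset:
    name: str
    owner: str
    visibility: Visibility = Visibility.PRIVATE
    shared_with: Set[str] = field(default_factory=set)
    is_result: bool = False  # results are ordinary datasets: same rules apply


def can_read(user: str, ds: Dataset) -> bool:
    """Authorization check, assuming the caller has already been authenticated."""
    if ds.visibility is Visibility.PUBLIC:
        return True
    return user == ds.owner or user in ds.shared_with


def share(ds: Dataset, user: str) -> None:
    """Grant one more user read access without making the dataset public."""
    ds.shared_with.add(user)
```

Note that `is_result` plays no part in `can_read`: treating results as regular datasets means a single rule set covers both privacy and sharing.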

The LEXIS team will support Open Call applicants in making efficient use of the external datasets they need as input for their scientific and industrial workflows. Such datasets, including datasets not yet mentioned in the pilots, will be made available in the DDI (or, if agreed, in the WCDA). Ideally, EUDAT staging (B2STAGE) and synchronisation and federation (B2SAFE) mechanisms will be used for this purpose.

In addition, suitable datasets already available on EUDAT systems can be found via the B2FIND portal. The user can then upload these datasets to the DDI using the appropriate APIs.
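A B2FIND search can be scripted before uploading the results to the DDI. This is a minimal sketch that assumes B2FIND, which is built on CKAN, exposes the standard CKAN action API `package_search` at the URL below; verify the endpoint against current B2FIND documentation. Only URL construction and response parsing are shown; fetching could use `urllib.request.urlopen(search_url(...)).read().decode()`.

```python
import json
from typing import List
from urllib.parse import urlencode

# Assumed endpoint: CKAN's package_search action on the B2FIND host.
B2FIND_SEARCH = "https://b2find.eudat.eu/api/3/action/package_search"


def search_url(query: str, rows: int = 10) -> str:
    """Build a search URL for a free-text query, limited to `rows` hits."""
    return f"{B2FIND_SEARCH}?{urlencode({'q': query, 'rows': rows})}"


def dataset_titles(response_body: str) -> List[str]:
    """Extract titles (falling back to names) from a CKAN-style JSON response."""
    payload = json.loads(response_body)
    if not payload.get("success"):
        raise RuntimeError("search request was not successful")
    return [pkg.get("title") or pkg.get("name", "")
            for pkg in payload["result"]["results"]]
```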

Once in the DDI, data can be moved using the staging mechanisms (DDI Staging API) within orchestrated LEXIS workflows. An overview is provided in the LEXIS Portal.

As far as possible, applicants within the Open Call are expected to communicate their demands for external datasets at application time.

DATA MANAGEMENT & PUBLICATION OF RESULTS

The LEXIS DDI (and, where agreed, the WCDA) can be used to store the input, intermediate and output data of a new pilot. A standard quota of 5 TB of persistent storage per project is proposed; up to 20 TB can be provided on negotiation (subject to availability). Note that the HPC systems in LEXIS are equipped with temporary SCRATCH storage in the range of hundreds of TB per user, which can be used according to the regulations of the respective HPC system. Each project shall state its expected storage volumes for input, output and intermediate data in its application (response to the Open Call).
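The quota tiers above can help when estimating the storage volumes requested in an application. This is a minimal sketch: the 5 TB and 20 TB figures come from the text, while treating intermediate data as SCRATCH-resident by default is an assumption, and the function names are hypothetical.

```python
# Figures from the text; treating intermediate data as SCRATCH-resident
# by default is an assumption, not a LEXIS rule.
STANDARD_QUOTA_TB = 5.0
NEGOTIABLE_MAX_TB = 20.0


def persistent_need_tb(input_tb: float, output_tb: float,
                       intermediate_tb: float,
                       intermediate_on_scratch: bool = True) -> float:
    """Volume that must live in persistent (DDI) storage."""
    return input_tb + output_tb + (0.0 if intermediate_on_scratch else intermediate_tb)


def quota_class(need_tb: float) -> str:
    """Map a persistent-storage need onto the quota tiers."""
    if need_tb <= STANDARD_QUOTA_TB:
        return "standard"
    if need_tb <= NEGOTIABLE_MAX_TB:
        return "negotiable (subject to availability)"
    return "exceeds LEXIS quota"
```

For example, 1 TB of input, 2 TB of output and 10 TB of intermediate data fit the standard quota if the intermediates stay on SCRATCH, but need a negotiated quota if they are kept persistently.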

The data flow within the workflows, i.e. the provisioning of input data and the de-provisioning, copying or archiving of output data, is to be implemented via calls to the DDI APIs (mostly the staging HTTP-REST API) and the WCDA, which normally receive these calls directly from the LEXIS workflow management.

Once output data is archived in the DDI or the WCDA, the LEXIS data management approach includes the possibility of assigning persistent identifiers (B2HANDLE PIDs, DOIs) where useful and feasible. This requires mandatory basic metadata (oriented mostly towards the DataCite/Dublin Core standards), whose provision may be enforced at early stages of workflow definition. Users who have to provide this metadata are supported by the LEXIS team.
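A completeness check on the basic metadata can be run before requesting a PID. This is a minimal sketch based on the six mandatory properties of the DataCite Metadata Schema (v4). The dictionary keys are illustrative names rather than DataCite's own serialization, and whether LEXIS enforces exactly this set is an assumption.

```python
from typing import Any, Dict, List

# The six mandatory properties of the DataCite Metadata Schema (v4),
# under illustrative key names.
DATACITE_MANDATORY = ("identifier", "creator", "title", "publisher",
                      "publication_year", "resource_type")

# The identifier is the PID/DOI itself, assigned when it is minted,
# so it is not expected from the user beforehand.
USER_SUPPLIED = tuple(k for k in DATACITE_MANDATORY if k != "identifier")


def missing_metadata(record: Dict[str, Any]) -> List[str]:
    """List user-supplied mandatory fields that are absent or empty."""
    return [key for key in USER_SUPPLIED if record.get(key) in (None, "", [])]


def ready_for_pid(record: Dict[str, Any]) -> bool:
    """Only request a PID/DOI once the basic metadata is complete."""
    return not missing_metadata(record)
```

Running such a check when the workflow is defined, rather than at archiving time, matches the idea of enforcing metadata early.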

Through its connection to EUDAT and EUDAT's intrinsically geographically distributed nature, the DDI can offer redundant storage and, if users want their data to be public, findability via the EUDAT Collaborative Data Infrastructure (B2FIND).

All datasets (input data, results, etc.) are managed from a data lifecycle perspective; for instance, intermediate results are properly deleted when explicitly requested or when they are no longer used. Every LEXIS user has full control over their datasets throughout their lifecycle. If datasets are shared among LEXIS users by replication, each replicated dataset is considered a unique entity with its own lifecycle and security policy.
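The two lifecycle rules above (replicas are independent entities; idle intermediate results become deletion candidates) can be sketched as follows. The record fields, the `replicate` helper and the idle-time criterion are hypothetical; LEXIS does not document its cleanup policy in this form.

```python
import copy
import uuid
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Iterable, List, Set


@dataclass
class DatasetRecord:
    owner: str
    kind: str                                        # "input", "intermediate" or "result"
    last_used: datetime
    readers: Set[str] = field(default_factory=set)   # per-dataset security policy
    id: str = field(default_factory=lambda: uuid.uuid4().hex)


def replicate(ds: DatasetRecord, new_owner: str, now: datetime) -> DatasetRecord:
    """A replica is a new entity with its own id, owner, policy and lifecycle."""
    replica = copy.deepcopy(ds)   # deep copy: policy changes do not leak back
    replica.id = uuid.uuid4().hex
    replica.owner = new_owner
    replica.last_used = now
    return replica


def stale_intermediates(datasets: Iterable[DatasetRecord], now: datetime,
                        max_idle: timedelta) -> List[DatasetRecord]:
    """Intermediate results idle for longer than max_idle: deletion candidates."""
    return [d for d in datasets
            if d.kind == "intermediate" and now - d.last_used > max_idle]
```

The deep copy is the essential design choice: changing the replica's readers or deleting the original leaves the other entity untouched.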

SPECIFIC WORKFLOWS

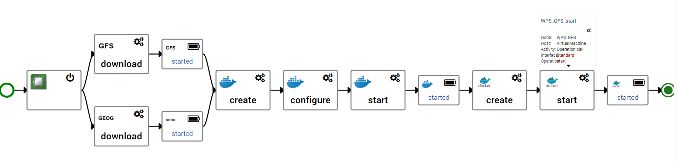

The LEXIS orchestration service will enable the management of complex workflows represented as directed acyclic graphs (DAGs), including:

- Cross computing between distributed HPC and Cloud infrastructures (hybrid workflow),

- Urgent computing-oriented workflows,

- Failover enabled workflows,

- Data aware workflows (including DDI operations).
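Representing a workflow as a DAG means tasks can be scheduled in dependency order, and independent tasks can run concurrently. The sketch below uses Python's standard `graphlib` module (3.9+) to derive such "waves" for a hypothetical hybrid workflow. The task names are illustrative, and this is not how the LEXIS orchestrator is implemented.

```python
from graphlib import TopologicalSorter  # Python 3.9+
from typing import Dict, List, Set

# Hypothetical hybrid workflow: each task maps to the tasks it depends on.
workflow: Dict[str, Set[str]] = {
    "stage_in_ddi": set(),                   # DDI operation
    "preprocess_cloud": {"stage_in_ddi"},    # Cloud VM
    "simulate_hpc": {"preprocess_cloud"},    # HPC job
    "visualise_cloud": {"simulate_hpc"},
    "archive_ddi": {"simulate_hpc"},
}


def execution_waves(graph: Dict[str, Set[str]]) -> List[List[str]]:
    """Group tasks into waves; tasks within a wave have no mutual dependencies
    and could run concurrently. Raises graphlib.CycleError (a ValueError)
    if the graph is not acyclic."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        waves.append(ready)
        sorter.done(*ready)
    return waves


print(execution_waves(workflow))
# [['stage_in_ddi'], ['preprocess_cloud'], ['simulate_hpc'], ['archive_ddi', 'visualise_cloud']]
```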

A catalogue of workflows templates based on the LEXIS Pilots will be made available. It provides examples of typical workflows addressed by the project. The intent is that Open call participants will be able to directly use or customize these workflows to implement their use cases.

Security & Confidentiality

While building the LEXIS Platform, the following concerns were the main drivers for taking security very seriously from the co-design phase onwards:

- Data security throughout the data lifecycle, covering authenticated and authorized access as well as public access. As a result, all data (input datasets and output results) can be kept private rather than necessarily public: the LEXIS platform can handle “sensitive data” from customers and ensure end-to-end “data security” (in transit),

- All source code (mainly algorithms) follows the same data security concept, since it can be private or public: the LEXIS platform protects customer IP by applying data protection principles to the source code of algorithms.

The LEXIS Platform has been built following modern industry best practices, such as:

- Security by Design,

- Zero trust model,

- Principle of least privilege,

- Defense in Depth,

- Separation of duties,

- Attack surface minimization,

- Keeping security simple,

- Secure defaults.

SPECIFIC WORKFLOWS

BASED ON PILOT FOR AERONAUTICS

Datasets

Because the software suite used in the Aeronautics pilot is either proprietary or commercial, it cannot be used within the Open Call. For this reason, we have prepared a publicly available dataset for Computational Fluid Dynamics (CFD) simulations that exploits LEXIS technologies with open-source CFD code. This dataset demonstrates the potential of the LEXIS platform for CFD simulations; since the Open Call is intended to target a broad community, it has been prepared with the widely used open-source CFD code OpenFOAM. This allows Open Call users to test the prepared workflows and LEXIS technologies for their own applications.

The test case, provided in OpenFOAM format, is the well-known T106C turbine profile benchmark tested on a linear cascade experimental rig. The dataset contains not only the geometrical description of the blade in terms of coordinates, but also the inlet boundary conditions (total pressure, temperature and flow angle), the outlet boundary condition (static pressure) and all necessary OpenFOAM files.

Because it includes the OpenFOAM files, the dataset is ready to run on the LEXIS platform, making it easy for users to become familiar with LEXIS technologies and to use the LEXIS platform test bed for CFD simulations.
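Before submitting a case like this in a workflow, it can help to check that the conventional OpenFOAM case layout is present. This is a minimal sketch: the required entries follow the usual OpenFOAM structure (`0`, `constant`, `system` with `controlDict`, `fvSchemes`, `fvSolution`). Whether the T106C dataset ships exactly this layout is an assumption, and `missing_case_entries` is a hypothetical helper.

```python
import tempfile
from pathlib import Path
from typing import List

# Conventional OpenFOAM case layout: initial/boundary conditions in "0",
# mesh and physical properties in "constant", run control in "system".
# The T106C dataset might differ (e.g. ship "0.orig" instead of "0").
REQUIRED = ["0", "constant", "system/controlDict",
            "system/fvSchemes", "system/fvSolution"]


def missing_case_entries(case_dir: str) -> List[str]:
    """Return required case entries that are absent under case_dir."""
    root = Path(case_dir)
    return [rel for rel in REQUIRED if not (root / rel).exists()]


if __name__ == "__main__":
    # Build an empty skeleton in a temporary directory and check it.
    with tempfile.TemporaryDirectory() as tmp:
        for d in ("0", "constant", "system"):
            (Path(tmp) / d).mkdir()
        for f in ("controlDict", "fvSchemes", "fvSolution"):
            (Path(tmp) / "system" / f).touch()
        print(missing_case_entries(tmp))  # []
```

The check does not require `constant/polyMesh`, since the mesh may be generated at run time (e.g. by `blockMesh`).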

SPECIFIC WORKFLOWS

BASED ON PILOT FOR EARTHQUAKE & TSUNAMI

Datasets

The earthquake and tsunami datasets will be prepared around the three scenarios planned for the evaluation of the pilot, namely (i) the fictional Padang earthquake and tsunami event, (ii) the 2015 Chile earthquake and tsunami, and (iii) the 2015 Nepal earthquake. Each dataset will include meshes for the tsunami simulations, the event parameters (a QuakeML description file) and a subset of the exposure dataset at a pre-defined resolution. The data will be in the formats used by the various tools of the pilot workflow, namely mesh elements and nodes for TsunAWI and OpenStreetMap subsets for the exposure dataset.
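The event parameters in a QuakeML file can be read with the Python standard library. The sketch below extracts origin time, hypocentre and magnitude from the first event, assuming QuakeML 1.2 (where depth is given in metres). The `SAMPLE` document uses placeholder values, not the pilot's actual scenario files.

```python
import xml.etree.ElementTree as ET
from typing import Dict

# Namespace of the QuakeML 1.2 "basic event description" (BED) package.
BED = "{http://quakeml.org/xmlns/bed/1.2}"

# Minimal document with placeholder values, for illustration only.
SAMPLE = """<q:quakeml xmlns:q="http://quakeml.org/xmlns/quakeml/1.2"
           xmlns="http://quakeml.org/xmlns/bed/1.2">
  <eventParameters publicID="smi:example/params">
    <event publicID="smi:example/event/1">
      <origin publicID="smi:example/origin/1">
        <time><value>2000-01-01T00:00:00Z</value></time>
        <latitude><value>-1.0</value></latitude>
        <longitude><value>100.0</value></longitude>
        <depth><value>20000</value></depth>
      </origin>
      <magnitude publicID="smi:example/mag/1">
        <mag><value>8.0</value></mag>
        <type>Mw</type>
      </magnitude>
    </event>
  </eventParameters>
</q:quakeml>"""


def event_summary(quakeml: str) -> Dict[str, object]:
    """Summarise the first event's first origin and first magnitude."""
    root = ET.fromstring(quakeml)
    event = root.find(f".//{BED}event")
    if event is None:
        raise ValueError("no <event> element found")
    origin = event.find(f"{BED}origin")
    magnitude = event.find(f"{BED}magnitude")
    if origin is None or magnitude is None:
        raise ValueError("event lacks an origin or a magnitude")

    def value(parent: ET.Element, tag: str) -> str:
        return parent.find(f"{BED}{tag}/{BED}value").text

    return {
        "time": value(origin, "time"),
        "latitude": float(value(origin, "latitude")),
        "longitude": float(value(origin, "longitude")),
        "depth_km": float(value(origin, "depth")) / 1000.0,  # QuakeML depth is in metres
        "magnitude": float(value(magnitude, "mag")),
        "magnitude_type": magnitude.findtext(f"{BED}type"),
    }
```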

A dedicated OpenStreetMap-based dataset and update set will be made available to allow external participants to benchmark the update process of OpenBuildingMap.

SPECIFIC WORKFLOWS

BASED ON PILOT FOR WEATHER & CLIMATE

Datasets

Weather and climate pilot

A large portion of the data relevant to the weather and climate pilot is proprietary model output, used at an intermediate stage of the pilot workflows, or third-party observations (e.g. Italian Civil Protection radar and weather stations observational data, Weather Underground personal weather stations) used as inputs, which cannot be shared publicly.

However, the weather and climate pilot will make the outcomes of its workflows publicly available where possible. In particular, this includes results from the WRF meteorological model, the Continuum hydrological model, the RISICO forest fire risk model, the ERDS model and the NUM urban model.

These results will be available for selected case studies in Italy and France over selected periods. The model output will mainly be provided in NetCDF format. Furthermore, the main modelling tasks of the Weather and Climate pilot will use ad hoc virtualization techniques based on Docker containers and virtual machines.

These virtualization approaches will make it possible to formulate simplified modelling workflows that can be demonstrated to external users, helping them become familiar with LEXIS technologies, and to explore possible approaches (portal, GUIs, etc.) that make it easier for external actors to formulate Weather and Climate experiments.

Urban air-quality dataset

In the framework of the weather and climate pilot, urban air-quality simulations covering the whole of Paris are produced for a set of specific dates in 2018. Note that the software used to produce this dataset is commercial and therefore cannot be used within the Open Call.

This dataset consists of hourly time series of 2D air-quality concentrations of NO2 and PM10 at ground level. The spatial resolution is irregular, ranging from 1 m close to the main sources (road network, industrial sources) up to 100 m far from them. There are around 1,000,000 output points, covering a domain of about 28 km x 28 km over Paris.
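The figures above allow a quick back-of-the-envelope estimate of data volume and point density. This sketch assumes each concentration value is stored as a 4-byte float32, and it ignores coordinates, metadata and NetCDF compression, so actual file sizes will differ.

```python
import math

# Figures from the text: ~1,000,000 output points, two species (NO2, PM10),
# hourly values, a domain of about 28 km x 28 km. Storing each value as a
# 4-byte float32 is an assumption.
POINTS = 1_000_000
SPECIES = 2
HOURS_PER_DAY = 24
BYTES_PER_VALUE = 4
DOMAIN_SIDE_M = 28_000.0


def daily_volume_mb() -> float:
    """Uncompressed concentration data for one simulated day, in MB."""
    return POINTS * SPECIES * HOURS_PER_DAY * BYTES_PER_VALUE / 1e6


def mean_spacing_m() -> float:
    """Spacing that a uniform grid with the same number of points would have."""
    return math.sqrt(DOMAIN_SIDE_M ** 2 / POINTS)


print(daily_volume_mb())  # 192.0
print(mean_spacing_m())   # 28.0
```

An equivalent uniform spacing of about 28 m lies between the stated 1 m and 100 m extremes, consistent with output points being concentrated near roads and industrial sources.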

The format of the dataset is NetCDF, standardized for the WCDA exchange API inside the LEXIS platform.

New Open Call partners may use the dataset freely, provided that it is used only inside the LEXIS platform for research or demonstration activities. No direct or indirect commercial use of this dataset is allowed.

Execution of hydro-meteorological forecasting workflows will be based on the following services:

- WRF model will be executed on HPC systems (LRZ and IT4I)

- Containers and virtual machines will be executed on cloud computing

- Workflows will be executed by the LEXIS Orchestration System based on TOSCA technology

SPECIFIC WORKFLOWS FOR WEATHER & CLIMATE

Two categories of workflows are envisaged:

- Exploiting pre-existing workflows to be used over different dates and areas

- Exploiting WRF simulations, including data assimilation, and passing the results to external models to be executed on external premises